Qualitative Analysis

Examination of non-measurable language data.

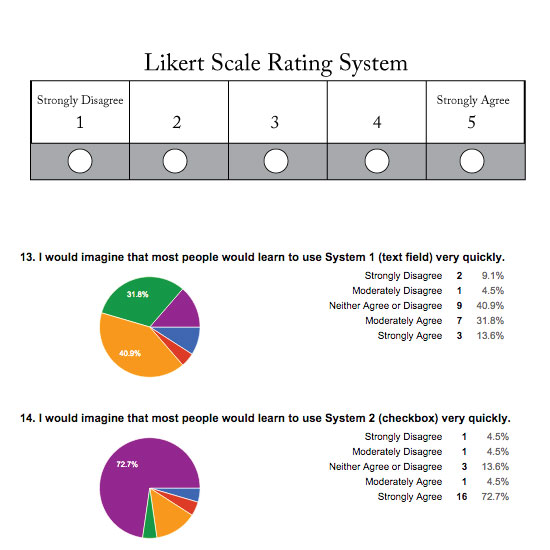

Qualitative analysis is the examination of non-measurable data. It is used to identify commonalities among users and can take place after an observation, interview, or survey.

The qualitative measure is the language the user provided during the observation or follow-up interview. This language is organized into categories to find commonalities. Once categories have been found, a quantitative analysis can be performed. For example, after observing 50 individuals completing Task 1, 76% of them (38 of 50) stated in the follow-up interview that they were confused.
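The step from coded language to a percentage like the 76% above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. The `CODEBOOK` categories and keywords are hypothetical. Keyword matching only stands in for the coding itself, which in practice is a researcher reading and categorizing each response. The function names are also made up for this example.

```python
from collections import Counter

# Hypothetical codebook: each category is matched by a few keywords.
# Real qualitative coding is done by a researcher reading each response;
# keyword matching here only stands in for that step.
CODEBOOK = {
    "confused": ["confused", "unclear", "lost"],
    "satisfied": ["easy", "simple", "liked"],
}


def code_response(text: str) -> set[str]:
    """Return every category whose keywords appear in one response."""
    lowered = text.lower()
    return {
        category
        for category, keywords in CODEBOOK.items()
        if any(word in lowered for word in keywords)
    }


def category_percentages(responses: list[str]) -> dict[str, float]:
    """Percentage of participants (0-100) whose response was coded to each category."""
    if not responses:
        return {category: 0.0 for category in CODEBOOK}
    counts = Counter()
    for text in responses:
        counts.update(code_response(text))  # each category counted once per participant
    total = len(responses)
    return {category: 100 * counts[category] / total for category in CODEBOOK}


# Illustrative input mirroring the Task 1 example: 38 of 50 participants report confusion.
responses = ["I was confused by the menu"] * 38 + ["It was easy to find"] * 12
print(category_percentages(responses))  # {'confused': 76.0, 'satisfied': 24.0}
```

Each response can fall into several categories, so the percentages are not required to sum to 100.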

Stakeholders require quantitative data to make their decisions. When I conduct a qualitative analysis, I understand how to analyze this data to obtain the quantitative measures that stakeholders need.

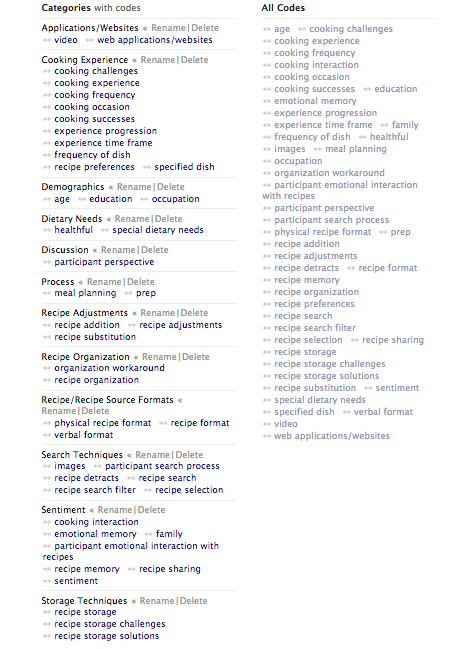

The qualitative analysis coding shows how individuals search for and organize their recipes. How is this information helpful? By analyzing the language in the observations and interviews, I was able to examine whether there was a correlation between age and searching for recipes in print versus online sources. Because cooking is partly tied to emotional memories and experiences, my analysis also revealed that users can form an emotional attachment to both print and electronic recipes.

What is the benefit of my research? My analysis assists in the development of a website or app that fosters an emotional attachment for the user and provides online systems for organizing recipes. My research also helps the marketing team develop online advertising that creates an emotional response and leads users to the recipe website.